Within the last year, artificial intelligence (AI) has evolved from a buzzword to an expectation. But with its immense power, AI brings a unique set of responsibilities. The question is, are you prepared to fully leverage AI’s potential while effectively managing its risks?

In our recent “The AI revolution is here. Are you ready to manage the risks?” thought leadership webinar, David Tattam, Chief Research and Content Officer, and Michael Howell, Research and Content Lead at Protecht, explored AI’s impact across industries and its implications for enterprise risk management, including the operational risks, the ethical implications, and the looming regulatory landscape of AI.

This blog discusses the audience polls and the questions asked at the webinar. If you missed the webinar live, then you can view it on demand here:

Poll results

Here are the results of the surveys across the Asia-Pacific, North America and EMEA regions. Let’s look at our two survey questions together.

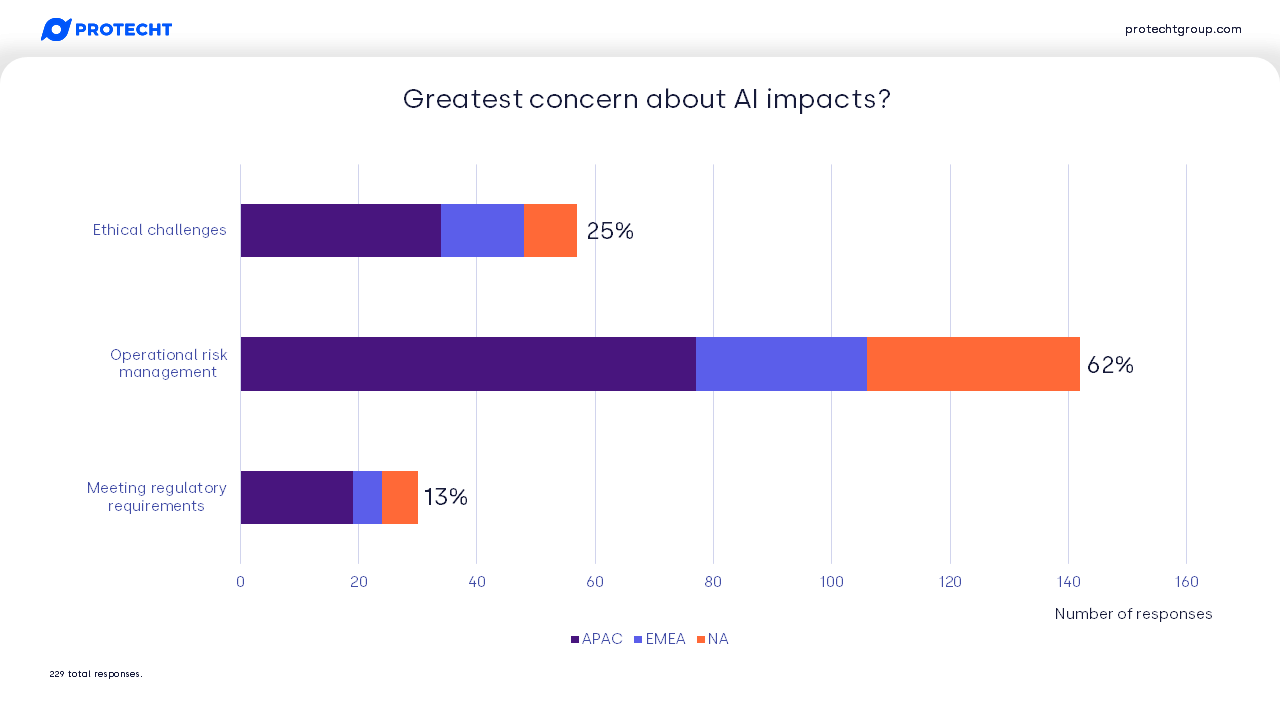

What’s your greatest concern about the impact of AI within your organisation?

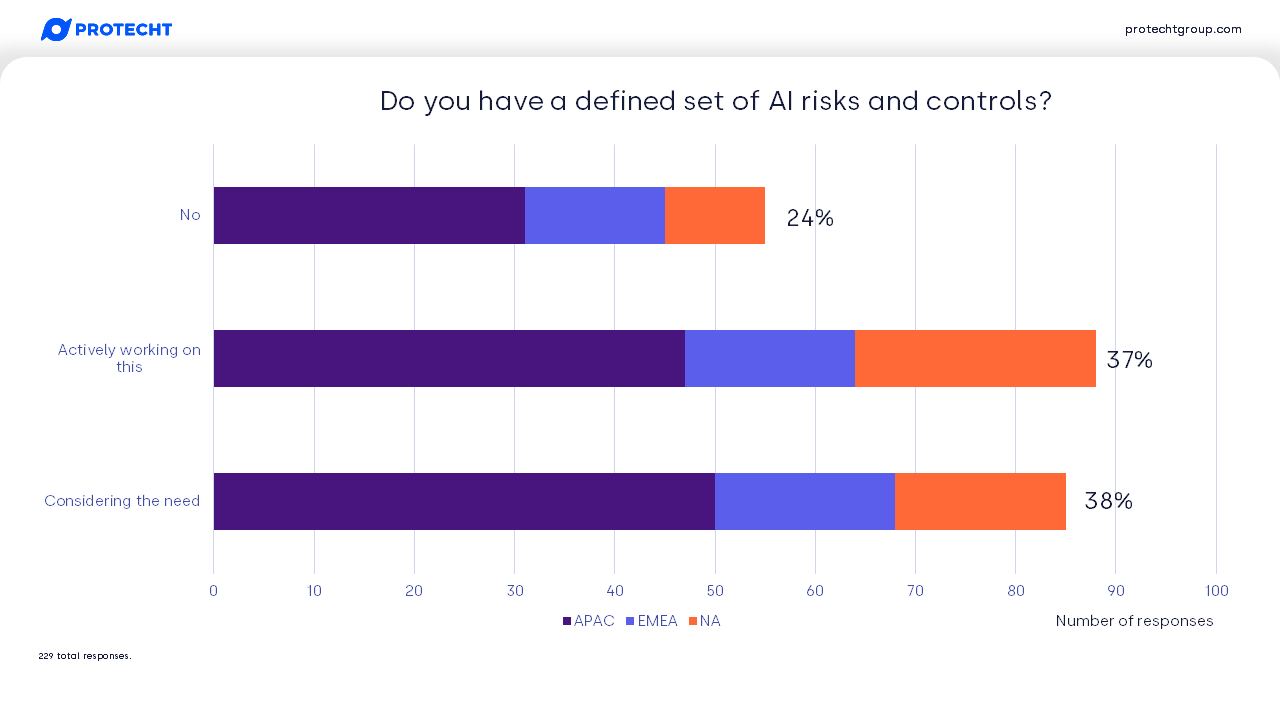

Does your organisation have a defined set of risks and controls for AI?

Operational risk was the clear frontrunner across all regions as the biggest concern, yet this seems disconnected from having defined risks and controls for AI. One explanation is that those who are concerned are still considering the best approach. It implies that you need good systems and structures in place and, in line with our discussion on governance, clear ownership of the management of risks and controls through an enterprise risk management platform.

Questions

Q1: How do you identify tasks that are outside the 'jagged frontier' of AI capabilities?

Q2: Will AI learn emotion and feelings?

Q3: Can you explain the risk and reward framework model in more detail?

Q4: What are the uses and risks associated with using AI in general insurance?

Q5: Are you two even real people?

Q6: What are your thoughts on the alignment of AI with ESG requirements?

Q8: How can we incorporate AI in our business-as-usual risk management tasks?

Q15: Why is the bowtie so important a tool for Protecht?

Q19: What about the financial industry using generative AI?

Q21: Might you have a sample AI policy that you could kindly share?

Q1: How do you identify tasks that are outside the 'jagged frontier' of AI capabilities?

We assume this question refers to the “Navigating the Jagged Technological Frontier” report, which was released in September 2023. The researchers used GPT-4, which they state was the most capable model at the time of the experiments.

The researchers themselves had difficulty defining which tasks sat inside and outside the frontier, which may foreshadow how hard it is for users of AI to know whether a task they are asking it to perform is inside or outside the frontier.

You can read the report itself here, which provides details of the tasks, and we wrote a blog that summarises our thoughts.

Q2: Will AI learn emotion and feelings?

That is a big question! This has been outside of the scope of our research, but here is a quick hypothesis. The AI we develop may be modelled on the human brain, but what we don’t bring along with it are the biological factors inherent to humans. AI won’t get tired, hungry, or be impacted by other factors that influence how humans feel (unless that is programmed in). Perhaps they might mimic the related behaviours due to the way they learn and the data they are trained on. It does lean into lots of interesting ethical considerations – such as what the boundaries may be for companion robots.

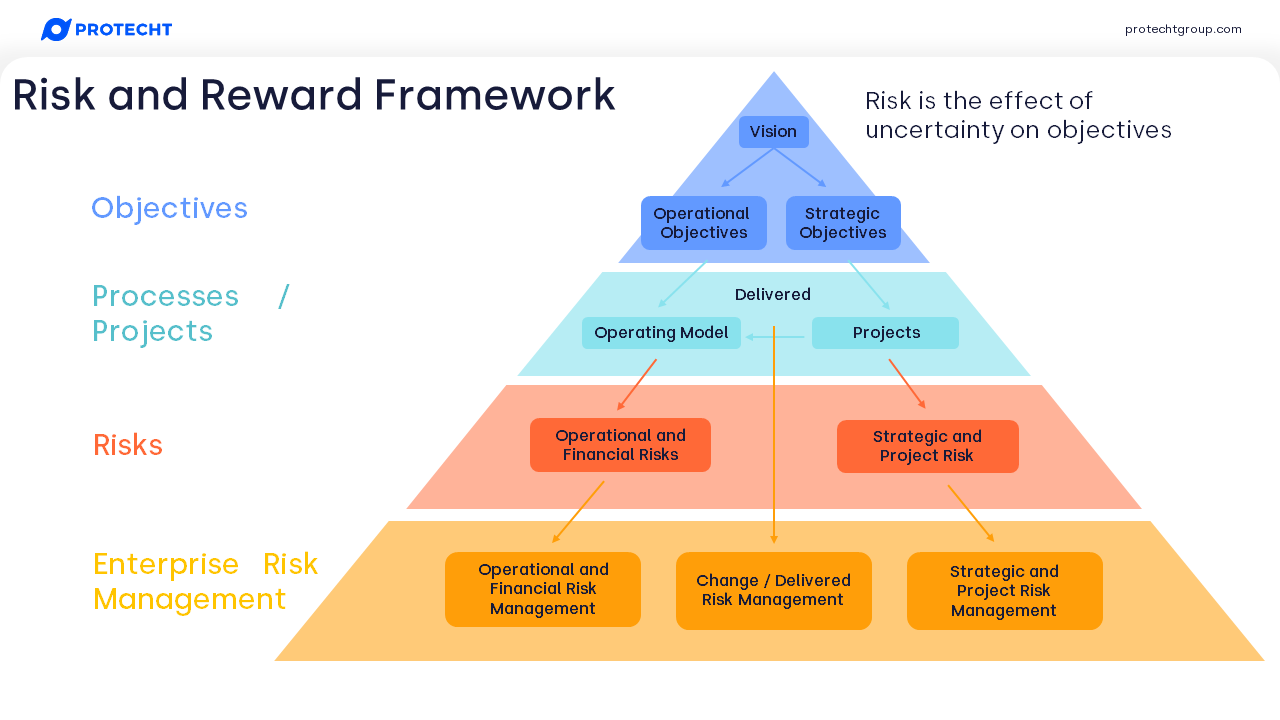

Q3: Can you explain the risk and reward framework model in more detail?

For reference, here is our risk and reward framework, which is foundational to how we think about the link between risk and objectives at Protecht:

Let’s stick with our AI examples. Firstly, consider strategic risk of AI. Your strategic direction may need to change with the proliferation of AI tools. Let’s assume you decide to ignore AI and continue running the same operating model – perhaps for a handful of years until your customer base disappears as they migrate to competitors who have embraced AI and can offer the same products faster, more effectively and cheaper. This is an example of a strategic decision risk that ends up undermining your objectives (at least, assuming one of your objectives is to stay in business!).

Let’s step back and flip that story. You put in place a strategy to integrate AI into your operations, and into the products you provide to customers. You assemble a project team, and you now have a strategic project, one that is designed to deliver change into the operating model. Firstly, there is strategic execution risk (or project execution risk). These are risks that would result in the benefits, costs or schedule of the strategy being outside of acceptable limits. Second are delivered risks. These are operational risks that are delivered into the operating model by the project. For example, some of the security risks we outlined in the webinar would be introduced or changed. The best time to consider these operational risks, and the appropriate risk treatments and controls, is during the project, not after completion. This is delivered risk management.

Finally, we have the operational risk that, even if you don’t change your operating model, will continue to evolve. We discussed some of these in the webinar, such as how AI is changing fraud and cyber risks. This brings us down to the final layer, where enterprise risk management takes place. This is where our Protecht ERM system is designed to help you cater to all your risks (not just AI), and support achievement of the objectives at the top of the pyramid.

If you’d like to get more intimate with this framework, we recommend our ERM – Bringing It To Life course.

Q4: What are the uses and risks associated with using AI in general insurance?

Here are a few ways that narrow AI is likely already being used in the general insurance sector:

- Automated claims processing – This can include AI assessing and validating evidence provided by customers, estimating damages based on that evidence, and streamlining the process

- Fraud detection – Anomalous patterns in customer behaviour, either individually or over time, can highlight potential fraud

- Personalised products – Products can be tailored to individual customer needs based on analysis of the customer’s history and preferences, which can address regulatory pressures to ensure customers are not provided products they do not need

- Predictive analytics – Analysing internal and external data sources to identify shifting trends in claims, integrated into existing actuarial, capital reserves and reinsurance processes

These also introduce risk. If not governed properly, AI used to personalise products or for predictive analytics (which might influence pricing) can introduce bias that may be considered unfair or discriminatory and fall foul of consumer regulation. As with many AI implementations, the security and use of people’s personal information is also critical.

The biggest risk is one that already exists for insurers: model risk. If the AI model makes errors on critical functions such as pricing and forecasting claim volumes, those errors could have significant implications for financial stability.

Q5: Are you two even real people?

After consulting with our creators – sorry, parents – we’ve been assured that we are indeed real!

Q6: What are your thoughts on the alignment of AI with ESG requirements?

I’ll assume this is referring to the potential cost of running AI, not just in economic terms, but also its environmental impact and whether it is sustainable. Training a model consumes a huge amount of power, which produces carbon emissions, and uses water to keep the chips cool.

If we consider large language models like ChatGPT, their usage also requires a lot more power compared to an internet search. Reports vary, but it could be 30-60 times, though this will depend on individual models.

Depending on your chosen use case (which may itself be informed by your environmental strategy), you might carefully select sustainable data centres, use AI itself to optimise energy consumption, or limit use of AI to specific use cases where it adds the most value or justifies its use by increasing other efficiencies that offset the environmental impact.

More broadly, you should review how AI impacts on other ESG factors important to your organisation or your stakeholders. For example, you may need to conduct careful planning of workforce impact when implementing AI, such as worker displacement or requirements to re-skill your workforce.

Q7: Do you agree there is a risk of reduction in human understanding of a problem (reduced skill) where models are used as a default?

This was another interesting observation from the Jagged Frontier report mentioned in the webinar. The only way people develop sufficient knowledge in a specific domain is to effectively work their way up through that domain, developing experience along the way. This allows you to appreciate when to challenge the output of AI, or even when to avoid its use for tasks it may not be good at.

This could become a systemic issue in the long term, both within organisations and more broadly. For some organisations who have made teams redundant and retained minimal in-house expertise, I’m curious to see the longer-term impacts.

Q8: How can we incorporate AI in our business-as-usual risk management tasks?

We broke down AI for risk management into two streams. The first is narrow AI, which is usually developed for a specific use case and is mostly used to manage a specific risk. Examples include credit risk scoring, anti-money laundering, or cyber threat detection.

The second stream covers broader enterprise risk management practices, which may be better served by effective use of generative AI. Here are the examples we included in the webinar:

- Identify components of risk

- Identify potential controls to manage a risk

- Tailored analytics

- Assess the size and implications of risk

- Develop scenarios and pre-mortems

- Develop control testing plans

- Streamline incident reporting

- Analyse incident data

- Synthetic data creation

- Identify and compare risk response strategies

There are of course many more specific applications. Let’s provide a more practical framework for how you might implement one or more of these:

- Identify a process that might benefit from the use of AI

- Define the benefits you are expecting; efficiency and improved quality of data are the most common, but make sure your use of AI has a purpose

- Outline how and where in the existing process AI will be used to produce those benefits

- Run a pilot to validate those benefits are achievable and consistent. For large language model tasks, you might develop a sequence of prompts for specific tasks to produce that consistency.

- Review your current workflow and procedures for the specific task, and update those processes where necessary

- Provide training and communication, and implement other change management practices to embed the process

- To the extent necessary, implement quality control processes and continuous improvement

More detailed steps will of course depend on the chosen use case. These should all be supported by policies and procedures that enable people to use AI in those processes.
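As a rough illustration of the pilot step above, where a fixed sequence of prompts is used to produce consistent results, here is a minimal Python sketch. Everything in it is an assumption for illustration: the `PromptStep` and `run_sequence` names, the incident-reporting prompts, and the `stub_model` function, which simply stands in for whichever large language model your organisation has approved.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class PromptStep:
    """One step in a fixed prompt sequence (hypothetical structure)."""
    name: str
    template: str  # may reference {input} and {previous}


def run_sequence(steps: List[PromptStep], text: str,
                 model: Callable[[str], str]) -> Dict[str, str]:
    """Run each prompt in order, feeding the prior output into the next."""
    results: Dict[str, str] = {}
    previous = ""
    for step in steps:
        prompt = step.template.format(input=text, previous=previous)
        previous = model(prompt)
        results[step.name] = previous
    return results


def stub_model(prompt: str) -> str:
    """Placeholder for a real model call; it only reports the prompt size."""
    return f"[model output for {len(prompt)}-character prompt]"


# Illustrative prompts for an incident-reporting pilot (made up for this sketch).
INCIDENT_STEPS = [
    PromptStep("summary", "Summarise this incident report in two sentences:\n{input}"),
    PromptStep("causes", "List likely root causes for this incident summary:\n{previous}"),
    PromptStep("controls", "Suggest controls that address these causes:\n{previous}"),
]

if __name__ == "__main__":
    report = "Customer emails were sent to the wrong recipients after a template change."
    for name, output in run_sequence(INCIDENT_STEPS, report, stub_model).items():
        print(name, "->", output)
```

Keeping the prompts in one reviewed list means every user runs the same steps in the same order, which makes the pilot results easier to compare and the process easier to quality control.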

Q9: How can we manage risk from AI tools developed by other organisations that we might be forced to use?

This will be essential to manage some of the risks we outlined in the webinar. First and foremost is the safety of your confidential data, and the personal data of your employees or customers. Second is the quality of the data you receive from the vendor if it is output from an AI model. I expect we will see a lot of scrutiny in vendor contracts in the next few years regarding how data is used in AI products, and how those vendors govern the use of their AI models (check those ‘research’ clauses).

A related risk is the evolution of shadow IT to shadow AI. This can be your people using AI under your nose – but also your vendors. By way of example, consider an outsourced contact centre that handles email correspondence on your behalf. An entrepreneurial manager decides to make operations more efficient by feeding those emails into an external large language model – and the model now gets trained on not only your proprietary data, but also your customers’ personal information.

As part of your vendor management, you will need to set out your policy of what you expect from your vendors and assess the risks they pose. As part of your due diligence, you will need to ask questions related to how they use AI, what governance and controls they have in place over it, and how they will provide ongoing assurance. Our Vendor Risk Management module is designed to help you efficiently and effectively oversee these vendor relationships.

Q10: How would you encourage boards, executives, and employees to act on AI risks to our organisation?

Acting on risk is about awareness. This includes awareness of risk management practices and desired behaviours in your organisation, as well as raising awareness of the specific risks – in this case those related to AI. We recommend aligning a top-down and bottom-up approach to risk management. Top down, the board and management define and communicate their expectations. Bottom up, give people at the coalface reporting mechanisms to escalate risks that might require attention.

It’s key to remember that people want to use AI (whether you have an official policy or not) because they see benefit in doing so. Running training and awareness sessions will help them use it safely while reaping those rewards.

Q11: What is the relationship between AI risk management, model risk management, and excess connection technology risk management?

AI risk management is essentially model risk management, and many of the governance and control expectations can be extended to AI models. Excess connection technology risk isn’t a term I’ve heard before and doesn’t appear common – but perhaps is an emerging term.

Our IT Risk Management eBook has more to say about governance and control of technology-related risks, including AI.

Q12: How can educational institutions deal with students cheating by using the artificial brain rather than their own?

This reminds me of a science article I read over a decade ago, after the internet had been around for several years. The key message – the way people learn was changing. At that time, we were moving from remembering specific information to remembering where and how to find information. The proliferation of generative AI may be another evolution of that. I’m not sure what that will look like exactly - in an ideal world I’d like to see it evolve into a bigger focus on critical thinking, but that might be optimistic.

I have a daughter in primary school, and I too wonder about the future of education. What skills is she going to need in 5-10 years’ time? How is the education system changing to deliver those? I’ve heard of some higher education institutions banning the use of AI, while others advocate for its use. Maybe education could evolve into assessing critical thinking (perhaps observing how students think about problems and use their tools), rather than looking at the specific answers.

AI is not going away. It is the widespread distribution and availability of knowledge, and the internet was the widespread distribution and availability of technology. Rather than thinking about how AI could mess up the current way we educate our children, we need to think about what education looks like once we accept that AI is here to stay and is a tool that is available for use. Embrace it, don’t run away!

To the broader point of societal trust, this is another systemic risk of AI we tangentially touched on when talking about deepfakes. Misinformation – and the ease with which it can be created – is becoming prominent and could cause us all to inherently mistrust information provided to us. Another reason to focus on critical thinking!

Q13: I would love to become an expert on AI risk. Do you have any tips on where to start this journey?

My highest recommendation goes to The Memo newsletter from Dr Alan Thompson at LifeArchitect. The newsletter commonly covers some technical information about AI models, some observations on regulatory developments and policy, and lots in between.

While Dark Reading is primarily about cybersecurity, it does cover AI risks, and occasionally related regulation. Some of its articles may be more technical in nature, but they help highlight how AI risks could unfold if not managed well.

If your organisation has regulatory newsfeeds, you may also be able to filter them for AI-related regulations, introduction of bills etc. If you receive these newsfeeds as part of your Protecht ERM subscription, then please contact your customer success representative for more information on how we can help.

Q14: Won't AI activity and business go to where there is no regulation, much as some say carbon regulations are offshoring high energy industries?

This will depend on the approach taken by regulators. While there seems to be a principles-based and collaborative global approach from most regulators, time will tell as these regulations come into play. However, whenever we have the interplay between regulators and humans, we will always have humans who wish to operate outside of regulation and there will always be a “dark” place to operate!

Q15: Why is the bowtie so important a tool for Protecht?

We think the risk bow tie is a great visual communication tool for how risks can unfold. It can allow non-risk people to see the pathways and how risks can play out, highlight potential control gaps, and demonstrate the linkages between the different components of risk and risk management. You can find out more in our risk bow tie eBook and risk bow tie Protecht Academy course.

Maybe when we all have brain implants, we will visualise risk in different ways!

Q16: Interested in the concept you outlined of AI stress testing – how can we put that concept into action?

We didn’t get much time to mention the use of AI in stress testing – yet another potential use case for AI. This could involve creating a range of stress scenarios and linking them to your processes and risks to demonstrate how you would perform under that stress. This is the essence of resilience. We will consider this for a future webinar, but would like to see capability in this field progress before we do, so we can bring something really tangible to the table.

Q17: Organisations should have the policy and protocols in place before initiating an AI program as delaying this process could prove very costly with negative financial and customer impact.

We agree. To us, this is the power of the risk / reward model that we covered. Strategy and risk management need to be working in tandem. Pursue AI and run experiments or pilots, but do them safely.

Q18: Do you have any comments on the challenges with accuracy of the results received from AIs, such as Google’s recent problems with chatbot images?

I assume this is in reference to recent issues with Google’s Gemini model. This is a problem given the inherent way that models are built. As they are based on probabilistic models (what is the most likely next set of words, or image that might match this prompt), they can inherently include stereotypes and reinforce bias that was in the training data.

If we look at text models, it’s basically auto-predict on steroids. It is looking for the ‘best next words’ based on its training and the context you provide it, not the actual answer or response. This may sometimes result in plausible sounding answers (collections of words or paragraphs that read well) that are not accurate. You may have heard of the lawyer who cited cases based on his interaction with ChatGPT – but the cited cases did not exist.
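To make the ‘best next words’ idea concrete, here is a toy Python sketch that counts which word most often follows another in a small made-up training text, then chains those predictions together. This is a deliberate simplification and an assumption for illustration only: real large language models use neural networks over tokens rather than simple word counts, and the `train_bigrams`, `predict_next` and `generate` names are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: str) -> dict:
    """Count which word follows which in the training text."""
    words = corpus.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows: dict, word: str) -> str:
    """Return the most frequent follower of `word`, or '' if unseen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return ""
    return candidates.most_common(1)[0][0]


def generate(follows: dict, start: str, length: int = 5) -> str:
    """Chain predictions together to produce fluent-looking text."""
    out = [start.lower()]
    for _ in range(length):
        nxt = predict_next(follows, out[-1])
        if not nxt:
            break
        out.append(nxt)
    return " ".join(out)


# Made-up training text for illustration only.
CORPUS = (
    "the court ruled in favour of the airline . "
    "the court ruled in favour of the passenger . "
    "the court ruled against the airline ."
)

if __name__ == "__main__":
    model = train_bigrams(CORPUS)
    print(generate(model, "the", length=6))  # the court ruled in favour of the
```

The output reads like the start of a real ruling, but nothing in the code checks whether any such ruling exists. The model only reproduces the most common word patterns in its training text, which is the same reason a much larger model can produce a convincing citation for a case that was never heard.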

Most providers of these models have been making efforts to include more diversity in the responses, but this may overcorrect. I don’t envy those who have to walk this tight line! Of course, this also shows that if you do develop your own model (or fine tune one on your data), you need to test thoroughly, and have the appropriate expertise.

Q19: What about the financial industry using generative AI?

There are a lot of opportunities in financial services for generative AI. We covered some of the risk management use cases. Here are a few opportunities:

- Tailored customer service support that takes into account previous interactions

- Customised marketing and delivery of products and services to the individual

- Customised education for customers on using products and services

- Data integration and analysis from various data sources through plain language enquiries

There are many more. Of course, these bring some risks as well that will have to be managed. While marketing could be tailored, it will have to remain fair, compliant, and avoid predatory practices.

Q20: The impact of AI will be very different across the globe – any insights into how this is being mapped out?

This one is only on my periphery. There is certainly a contingent of people (AI specialists, policy makers etc) who hope that all of humanity will benefit as the technology develops, particularly as we approach the potential for Artificial General Intelligence. Some countries simply don’t have the capacity or capability to compete in model development. Countries that suffer from poverty, famine and poor living conditions may have the most to gain from ethical application of AI to solve those regional problems. Some also promote a universal basic income, on the assumption that not everyone will be required to work at some point in the future.

Q21: Might you have a sample AI policy that you could kindly share?

We don’t have one to share at this stage, but it’s a good idea. We’ll keep you posted on the work we’re doing in this area to provide resources, both as downloadable content and within Protecht ERM.

Next steps for your organisation

This blog was based on the audience questions and survey responses from Protecht’s recent “The AI revolution is here. Are you ready to manage the risks?” thought leadership webinar, which explored AI’s impact across industries and its implications for enterprise risk management, including the operational risks, the ethical implications, and the looming regulatory landscape of AI.

The webinar covered:

- AI in action: How are forward-thinking organisations using AI to fuel growth?

- Balancing act: Risk vs reward in the world of AI – how to strike the right balance.

- Regulatory landscape: Navigating the complex field of AI regulation – what you need to know.

- AI governance: Who should be at the helm of managing AI risks in your organisation?

If you missed the webinar live, then you can view it on demand here: